Alibaba’s most advanced vision-language model, Qwen3-VL 235B, is now accessible via Ollama’s cloud infrastructure, marking a milestone in multimodal AI deployment. The dense and sparse variants of the Qwen3-VL family (4B, 8B, 30B and 235B) are slated to become available for local use in due course.

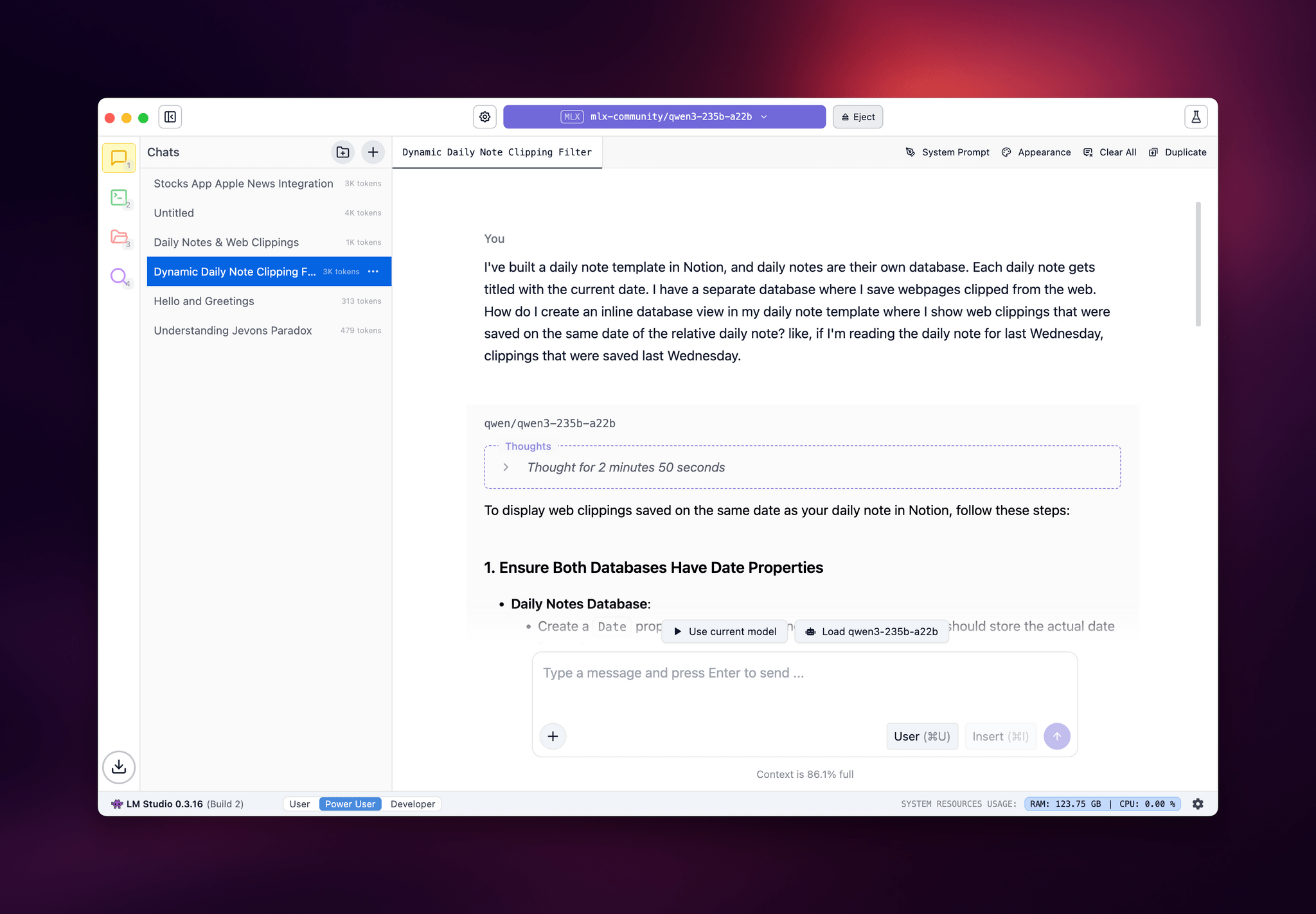

Qwen3-VL extends visual reasoning into action: it can interpret graphical interfaces, recognise GUI elements, press buttons, call external tools and complete tasks autonomously. Its core strength lies in combining visual and textual reasoning, and its language performance is reported to match that of the flagship Qwen3-235B-A22B model. Ollama serves it under the model tag “qwen3-vl:235b-cloud”, so running “ollama run qwen3-vl:235b-cloud” starts it in the cloud environment.
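For developers who prefer to call the model programmatically rather than from the command line, the request can be sketched in Python against Ollama’s local HTTP chat endpoint. This is a minimal sketch, not an official client: it assumes an Ollama install listening on the default port 11434, and that the account may need to be signed in before cloud models respond. The helper names `build_chat_payload` and `send_chat` are illustrative, not part of any Ollama library.

```python
import base64
import json
import urllib.request

# Assumption: a local Ollama server on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "qwen3-vl:235b-cloud"  # model tag named in the article


def build_chat_payload(prompt, image_bytes, model=MODEL_TAG):
    """Build a single-turn chat request carrying one image (hypothetical helper)."""
    # Ollama's chat endpoint takes images as base64-encoded strings.
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "stream": False,  # ask for one JSON reply instead of a stream
        "messages": [
            {"role": "user", "content": prompt, "images": [image_b64]},
        ],
    }


def send_chat(payload, url=OLLAMA_CHAT_URL):
    """POST the payload and return the assistant's text (hypothetical helper)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["message"]["content"]
```

A call would look like `send_chat(build_chat_payload("Which buttons are visible?", open("screenshot.png", "rb").read()))`, where the screenshot file name is a placeholder.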

In its cloud-deployed form, Qwen3-VL enables developers and researchers to experiment with its full capacity immediately. Ollama’s roadmap indicates that local versions of the VL models will follow shortly, allowing on-device inference outside cloud infrastructure.

The architectural innovations in Qwen3-VL reflect advances in multimodal integration. From joint pretraining of vision and text modalities to an enlarged context window and refined spatial reasoning, the model is engineered to tackle tasks involving visual understanding and actions. Benchmark results suggest it ranks among the leading models worldwide on agentic benchmarks such as OSWorld, particularly when tool use is integrated.

Qwen3-VL’s arrival comes as the broader Qwen3 suite continues gaining traction. Earlier, Alibaba released Qwen3-235B-A22B with a mixture-of-experts structure: 235 billion total parameters with 22 billion activated, alongside other dense model variants. Quantisation studies show that moderate reductions in bit precision retain strong performance, though ultra-low quantisation induces accuracy loss.

Technologists working on model deployment have already explored running Qwen3-235B in cloud GPU environments using Ollama and vLLM, noting the hardware demands. Meanwhile, research groups are pushing mobile-friendly multimodal models like AndesVL, built on Qwen3 foundations, targeting edge devices with 0.6B to 4B parameters.

In the broader AI landscape, Qwen3-VL’s launch positions it among a growing cohort of state-of-the-art visual language models. The Qwen3-Omni model recently achieved leading performance across 36 audio and audio-visual benchmarks, indicating Alibaba’s ambition in comprehensive multimodal modelling. Other open frameworks such as LLaVA-OneVision-1.5 are emerging concurrently, emphasising efficiency and accessibility in multimodal training.

One potential constraint lies in infrastructure requirements: cloud deployment hides the enormous compute costs from the user, while local deployment demands substantial memory and GPU resources. Quantisation strategies may help mitigate this, but trade-offs in model fidelity remain. Engineering efforts will need to balance performance against resource constraints as the VL models move to edge settings.
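The scale of the memory demand can be illustrated with a rough, back-of-the-envelope calculation. The sketch below counts weight storage only for a 235-billion-parameter model at different bit widths; it ignores the KV cache, activations, the vision encoder and quantisation overhead such as scaling factors, so real requirements would be higher. The function name `weight_memory_gb` is illustrative.

```python
TOTAL_PARAMS = 235e9   # total parameters, from the article
ACTIVE_PARAMS = 22e9   # parameters activated per token, from the article


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# In a mixture-of-experts model every expert generally has to be resident in
# memory, so the total count drives storage; the active count mainly reduces
# compute per token.
for bits in (16, 8, 4):
    total_gb = weight_memory_gb(TOTAL_PARAMS, bits)
    print(f"{bits}-bit weights: ~{total_gb:.1f} GB")
```

Under these assumptions the weights alone come to roughly 470 GB at 16 bits, 235 GB at 8 bits and 117.5 GB at 4 bits, which is why the largest variant is more practical as a cloud model while the smaller ones are the natural candidates for local use.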